We’re often asked how our technology consistently delivers such a high degree of accuracy. In this post, we explain the basic concepts behind our validation process and illustrate them with real-life examples.

Every Credit Analyst has suffered the dread of walking into an Investment Committee meeting, only to have their credibility thrown into doubt due to an arcane mistake in the financial model. Human error is a common issue in manually built credit models - sometimes due to inaccurate data entry by the Credit Analyst, sometimes due to misreported data by the Company, and sometimes both.

In fact, research suggests that the cell error rate in manually built spreadsheets generally ranges from 1% to 2%. In a financial model containing thousands of data points, that rate can translate into dozens or even hundreds of erroneous cells, which is a big margin of error.
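The arithmetic behind that claim takes only a few lines of Python. This sketch assumes, for simplicity, that each cell is wrong independently and with the same probability. Real spreadsheets only approximate that. The function names are our own.

```python
# Back-of-envelope arithmetic for manual-spreadsheet errors.
# Assumption (for illustration only): every cell is wrong independently
# with the same probability, which real models only approximate.

def expected_cell_errors(n_cells: int, error_rate: float) -> float:
    """Expected number of erroneous cells: n * r."""
    return n_cells * error_rate


def prob_at_least_one_error(n_cells: int, error_rate: float) -> float:
    """P(at least one erroneous cell) = 1 - (1 - r)^n under independence."""
    return 1.0 - (1.0 - error_rate) ** n_cells


if __name__ == "__main__":
    for n in (5_000, 10_000):
        low = expected_cell_errors(n, 0.01)
        high = expected_cell_errors(n, 0.02)
        print(f"{n} cells: {low:.0f} to {high:.0f} expected errors")
    p = prob_at_least_one_error(1_000, 0.01)
    print(f"P(at least one error, 1,000 cells at 1%): {p:.5f}")
```

Under that assumption, a 10,000-cell model would be expected to contain 100 to 200 errors. Even a 1,000-cell model at a 1% rate is almost certain to contain at least one.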

At Cognitive Credit, we firmly believe that technology is best applied to the challenges of data accuracy.

In the third instalment of our financial data extraction series (following "How our tech makes financial data extraction easier" and "Why financial restatements matter"), we take a closer look at the difficulties involved in delivering accurate data and at how our technology is smart enough to deal with them.

Financial data accuracy: Our solution

Source documents can contain any number of potential extraction issues. Poor table layouts, bad column formatting, inconsistent terminology and poor-quality page scans are just a few, and any of them can trouble even the most sophisticated automated data extraction technology. In an earlier post in this series, we explained how our technology extracts financial data and deals with some of these challenges.

Once the data has been extracted from financial reports, the resulting model must be built so that every data point is accurate. A technological solution to this accuracy challenge relies on two key pieces of information:

- The original value of a data point in every document in which it is reported

- The known accounting relationship that supports that data point

As any financial analyst knows, companies report a single period’s numbers in multiple financial reports. For example, Income Statement numbers for FY20 will often appear in the FY20, FY21 and even FY22 reports. This repetition is what lets us cross-check the value of a specific data point.
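Here is a minimal sketch of the cross-document check. It assumes that each report's value for the same line item and period has already been extracted into a dictionary. The majority-vote rule and the `cross_check` name are our own illustration, not a description of our production system. A real system would also need to treat legitimate restatements separately rather than as disagreements.

```python
from __future__ import annotations

from collections import Counter
from decimal import Decimal


def cross_check(reported: dict[str, Decimal]) -> tuple[Decimal | None, list[str]]:
    """Cross-check one data point (same line item, same period) across reports.

    `reported` maps a document name to the value that document reports.
    Returns (consensus value, documents that disagree with it). If no value
    is reported by a strict majority of documents, there is no consensus:
    the value is None and every document is returned for review.
    """
    if not reported:
        return None, []
    # Most frequently reported value and how many documents report it
    value, votes = Counter(reported.values()).most_common(1)[0]
    if votes * 2 <= len(reported):
        return None, sorted(reported)
    outliers = sorted(doc for doc, v in reported.items() if v != value)
    return value, outliers


if __name__ == "__main__":
    # Hypothetical values for one FY20 line item, for illustration only
    reports = {
        "FY20 annual report": Decimal("250.4"),
        "FY21 annual report": Decimal("250.4"),
        "FY22 annual report": Decimal("205.4"),
    }
    print(cross_check(reports))
```

Values are held as `Decimal` so that reported figures compare exactly, without binary floating-point noise.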

Additionally, a value can be validated by applying basic accounting concepts. For example, if we have a reported value for Gross Profit, we can also derive it independently using a simple formula:

Gross Profit = Revenue - Cost of Goods Sold (COGS).
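Here is a minimal sketch of the Gross Profit check in Python. It uses `decimal.Decimal` to avoid floating-point rounding. The function name and the one-unit tolerance are hypothetical. The right tolerance depends on how a given report rounds its figures.

```python
from decimal import Decimal

# Hypothetical tolerance of one reporting unit, to absorb rounding when
# figures are published in thousands or millions.
ROUNDING_TOLERANCE = Decimal("1")


def check_gross_profit(revenue: Decimal, cogs: Decimal, reported_gp: Decimal,
                       tolerance: Decimal = ROUNDING_TOLERANCE) -> bool:
    """Return True if the reported Gross Profit agrees with Revenue - COGS.

    COGS is passed as a positive cost, matching the formula in the text.
    """
    derived = revenue - cogs
    return abs(derived - reported_gp) <= tolerance
```

A value that agrees within the tolerance can be treated as confirmed. Anything outside it becomes a candidate for review.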

It’s the combination of these two automated checks, executed across millions of data points from multiple documents in mere minutes, that enables us to deliver the most accurate fundamental credit data sets.
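The two checks might be combined by assigning one status per data point. This hypothetical sketch does that. The `DataPoint` type, the status labels and the default tolerance of one unit are all assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from decimal import Decimal


@dataclass
class DataPoint:
    """Hypothetical container for one line item in one period."""
    name: str
    reported: list[Decimal]      # the item as reported in each document
    derived: Decimal | None      # value implied by its accounting relationship


def status(dp: DataPoint, tolerance: Decimal = Decimal("1")) -> str:
    """Combine the cross-document and accounting checks into one status."""
    if not dp.reported:
        return "missing"
    if len(set(dp.reported)) > 1:
        return "inconsistent across documents"
    value = dp.reported[0]
    if dp.derived is not None and abs(value - dp.derived) > tolerance:
        return "fails accounting check"
    return "reconciled"


def validate(points: list[DataPoint]) -> dict[str, str]:
    """Run both checks over every data point in a model in one pass."""
    return {dp.name: status(dp) for dp in points}
```

Each data point is checked independently. That keeps the work simple to spread across many documents and periods.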

How it works: Real-life examples

To illustrate this process in action, we’ve pulled a couple of real-life examples directly from our web application. In the first example, we look at how our technology validated a specific FY20 Operating Earnings data point for a company in our US HY coverage universe:

In this example, the published value in the credit model is 1,179. The Audit Panel shows that the value has been extracted and reconciled in two ways:

- The company reported the same value in four financial documents, including a preliminary statement

- The sum of the values in the supporting accounting concept confirms this value, i.e. EBIT = Revenue + COGS (together, Gross Profit) + Operating Expenses, with costs carried as negative values

As a result, we can confidently reconcile the value as 1,179. It’s through this iterative series of calculations, performed at a scale and speed not possible for a human, that we guarantee 99.9% financial data accuracy.
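The EBIT formula in the first example uses plus signs throughout. That only balances if costs are stored as negative numbers. Under that convention, Revenue + COGS gives the same result as Gross Profit = Revenue - COGS with COGS expressed as a positive cost. This sketch assumes that sign convention. The helper names and figures are hypothetical and are not the values behind the 1,179 example.

```python
from __future__ import annotations

from decimal import Decimal


def sum_components(components: dict[str, Decimal]) -> Decimal:
    """Sum signed line items; revenue is positive, costs are negative."""
    return sum(components.values(), Decimal("0"))


def confirms(published: Decimal, components: dict[str, Decimal],
             tolerance: Decimal = Decimal("0")) -> bool:
    """True if the signed components add up to the published subtotal."""
    return abs(sum_components(components) - published) <= tolerance


if __name__ == "__main__":
    # Hypothetical figures, not taken from any company's reports
    components = {
        "Revenue": Decimal("5000"),
        "COGS": Decimal("-3200"),               # negative: a cost
        "Operating expenses": Decimal("-900"),  # negative: a cost
    }
    gross_profit = components["Revenue"] + components["COGS"]
    # Same result as Revenue - COGS with COGS expressed as a positive cost
    assert gross_profit == components["Revenue"] - abs(components["COGS"])
    print(confirms(Decimal("900"), components))
```

Storing every line with its sign means each subtotal is a plain sum. This keeps the check the same for any concept, whatever its components.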

What happens when a value is inconsistently reported?

Occasionally, a value is either reported inconsistently across multiple documents or doesn’t match the sum of its known components. Whilst a human analyst might struggle to spot these errors, our technology is built specifically to identify them.

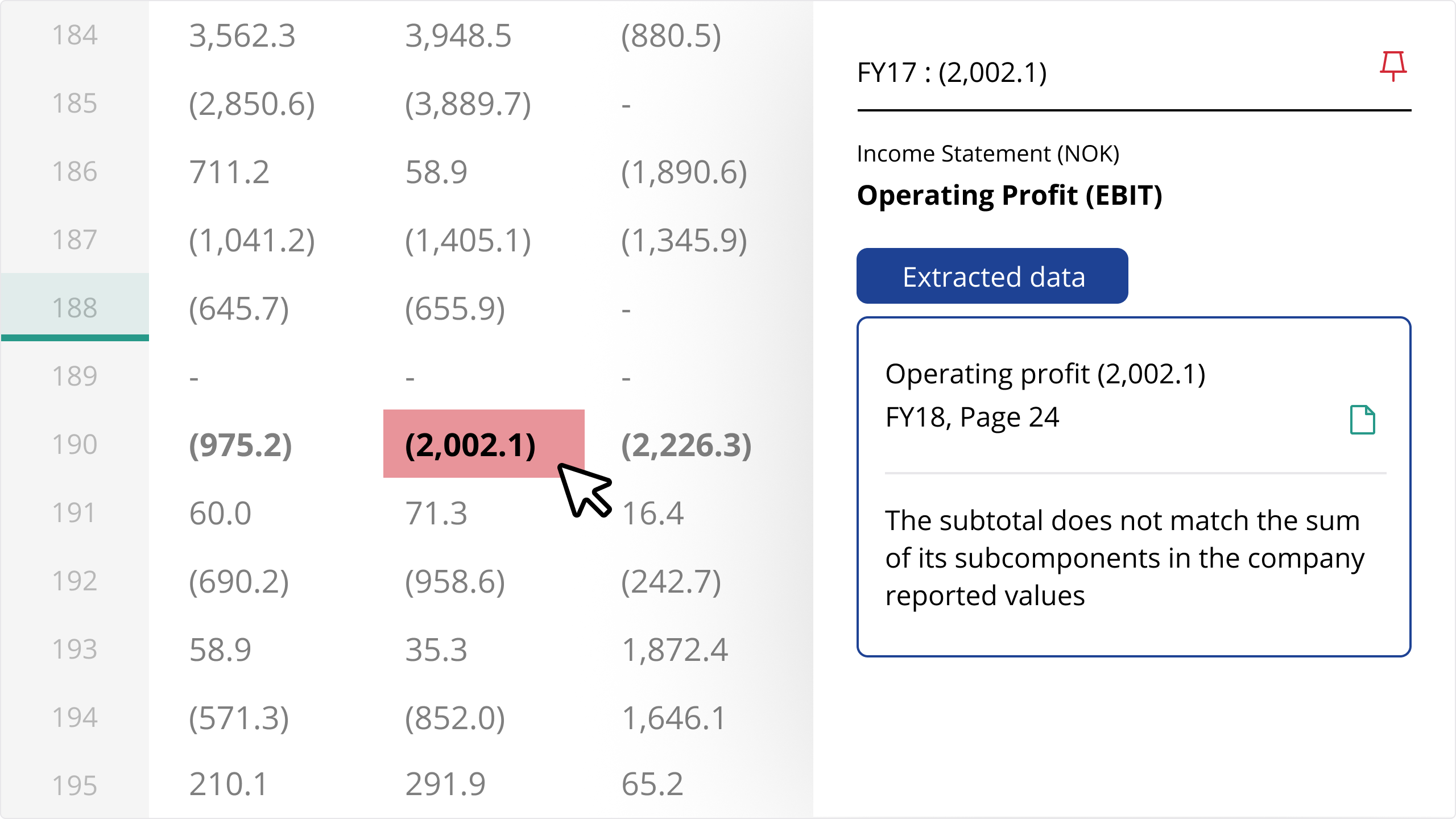

In the next example, our technology identified an erroneous value reported in a particular income statement, where the Operating Profit (EBIT) components had been incorrectly summed:

Because inconsistencies are highlighted within the Financials, the value in question is surfaced instantly. The Audit Panel then explains the inconsistency in more detail for full transparency and lets the end user navigate straight back to the source document.

Our technology doesn’t automatically correct the inconsistency (it is an officially reported value, after all). Instead, we highlight the error so that the end user can decide how best to proceed.
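The "flag, don't overwrite" design can be sketched as follows. The published value sits in a frozen dataclass so that no check can alter it. Any discrepancy is recorded as a flag carrying the source document and page, so a user can navigate back to it. The type names, fields and message format are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from decimal import Decimal


@dataclass(frozen=True)
class ReportedValue:
    """A value exactly as published; frozen so checks cannot overwrite it."""
    line_item: str
    value: Decimal
    source_document: str   # hypothetical pointer back to the source
    page: int


@dataclass
class AuditEntry:
    """The untouched reported value plus any issues found with it."""
    reported: ReportedValue
    flags: list[str] = field(default_factory=list)


def audit_subtotal(subtotal: ReportedValue,
                   components: list[ReportedValue]) -> AuditEntry:
    """Flag a reported subtotal that differs from the sum of its components.

    The published value is kept as-is; the discrepancy is only recorded.
    """
    entry = AuditEntry(reported=subtotal)
    computed = sum((c.value for c in components), Decimal("0"))
    if computed != subtotal.value:
        entry.flags.append(
            f"{subtotal.line_item} reported as {subtotal.value} but components "
            f"sum to {computed} (see {subtotal.source_document}, p. {subtotal.page})"
        )
    return entry
```

Keeping the reported value immutable and putting the finding in a separate list mirrors the approach described above. The data stays faithful to the filing, and the decision stays with the analyst.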

The most accurate data for your analysis

These examples are not an exhaustive list of challenges, but they are representative of the difficulties in extracting and validating financial data for use in our credit models. Our technology has been specifically designed and developed to build financial models accurately, quickly and with a high degree of transparency.

To start using the market’s most accurate data sets in your credit analysis, request a demo today.